The Darkish Underbelly of Peer Review


I'm not sure when modern medical science was born. Maybe sometime during the Enlightenment, or maybe when James Lind first gave those sick sailors some oranges to cure scurvy.

But peer review, that most venerable and feared practice, is a much more modern institution. Until the mid-20th century, the editors of scientific journals were the gatekeepers of publication.

External peer review changed that practice, presumably for the better. Experts in the field would be asked to comment on a manuscript and recommend changes or new experiments, all in an effort to keep the quality of publications high.

Peer review (and I have quite a bit of experience with it) is often helpful, sometimes hostile, and the cause of many bruised egos.

This statement greeted me as the first line of a recent review of one of my manuscripts:

So you're saying there's a chance.

But by and large, I've benefited from peer review. My papers are better because someone tore them to shreds. That's part of what makes science cool.

A research letter in the Journal of the American Medical Association, however, takes a bit of the bloom off this rose by demonstrating that peer reviewers are people too.

Here's what you need to know:

There's a journal called Clinical Orthopaedics and Related Research, which is a respectable repository of ortho studies. That journal uses both single-blind and double-blind peer review. Single-blind is the standard: the reviewers know who wrote the paper, but the authors don't know who reviewed it. This prevents hurt feelings and drinks thrown in people's faces at academic conferences.

Double-blind review means the reviewers don't know who the authors are either. The science stands on its own.

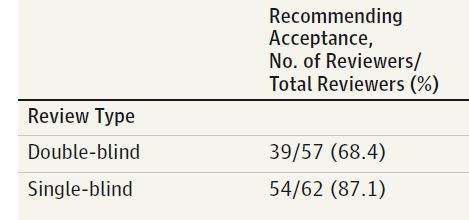

OK, so researchers wrote a fake ortho study containing several minor-to-moderate errors. The fake "authors" were listed as two very well-regarded orthopedic scientists. Some reviewers were randomly assigned to single-blind review, and another group to double-blind review. What do you think the acceptance rates looked like?

You got it. When the reviewers knew who the authors were, the acceptance rate was 87%. When they didn't, the acceptance rate was 68%.

In other words, that 19-point gap means roughly one extra acceptance for every five submissions, based on the cachet of the authors alone.
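For the curious, here is the back-of-the-envelope arithmetic behind that "one in five," treating the two acceptance rates as directly comparable proportions (a simplifying assumption on my part):

```latex
% Acceptance rates: single-blind (authors known) vs. double-blind (authors hidden)
p_{\text{single}} = 0.87, \qquad p_{\text{double}} = 0.68
% Absolute difference: 19 extra acceptances per 100 submissions
\Delta = p_{\text{single}} - p_{\text{double}} = 0.87 - 0.68 = 0.19 \approx \tfrac{1}{5}
% Relative difference: about 28% more acceptances when reviewers know the authors
\frac{p_{\text{single}}}{p_{\text{double}}} = \frac{0.87}{0.68} \approx 1.28
```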

Now, as an author with very little cachet, I should be upset by this, but I have trouble getting angry about it. The thing is, maybe a well-established researcher with a long track record of high-quality, scholarly research should get a bit of a bonus when it comes to acceptance rates.

See, a lot of stuff never makes it into a paper: things like the quality of the assays performed in the lab, the experience of the technicians, and so on. Researchers with well-established, long-running, successful labs do these things well and deserve a bit of credit.

And hey, if you want to level the playing field, how about letting the young upstarts get away with a "major revision" instead of an outright rejection in that first round? It seems fair to me, but I'm not sure how reviewer three will feel about it.