Spray this up your nose and you'll take more risks in social situations. No, it's not that.

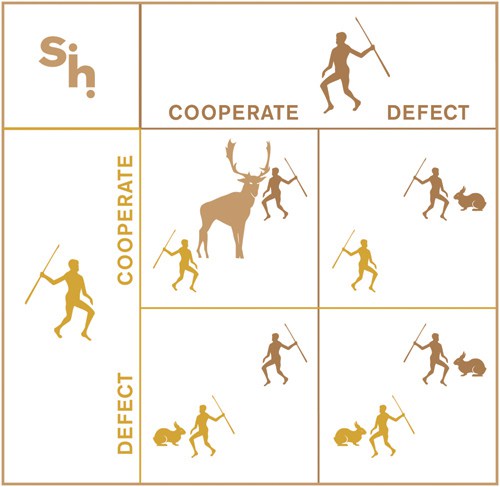

You and a stranger are sitting in small cubicles, unable to see each other, and $200 is at stake. It's called the stag hunt game. Each of you can choose to hunt stag or rabbit. Hunting a stag requires two people but gives you that big $200 payoff. Choosing rabbit gets you $90 if you hunt alone or $160 if your partner chooses rabbit too. Get it? The risky choice requires cooperation.
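
For readers who like to see the arithmetic, here is a minimal Python sketch of why stag is the risky bet. It uses the payoffs described above: $200 for stag/stag, and $90 or $160 for rabbit. The post doesn't say what a lone stag hunter earns, so the sketch assumes $0. That number, and the function names `expected_payoff` and `stag_threshold`, are hypothetical illustrations, not taken from the study. The idea is this: if you think your partner picks stag with probability p, stag only beats rabbit on expected dollars once p clears a break-even point.

```python
from fractions import Fraction

# Payoffs to *you*, in dollars, keyed by (your choice, partner's choice).
# Stag/stag and both rabbit payoffs follow the post; the payoff for hunting
# stag alone is NOT given there, so 0 is a hypothetical placeholder.
PAYOFFS = {
    ("stag", "stag"): 200,
    ("stag", "rabbit"): 0,      # assumption: a lone stag hunter gets nothing
    ("rabbit", "stag"): 90,     # you hunt rabbit alone
    ("rabbit", "rabbit"): 160,  # your partner chooses rabbit too
}


def expected_payoff(choice, p_partner_stag, payoffs=PAYOFFS):
    """Expected dollars for `choice` if the partner picks stag with probability p."""
    p = Fraction(p_partner_stag)
    return p * payoffs[(choice, "stag")] + (1 - p) * payoffs[(choice, "rabbit")]


def stag_threshold(payoffs=PAYOFFS):
    """Belief p at which stag and rabbit have equal expected payoff.

    Solves p*a + (1-p)*b = p*c + (1-p)*d for p, where a, b are the stag
    payoffs and c, d the rabbit payoffs (partner stag, partner rabbit).
    """
    a = payoffs[("stag", "stag")]
    b = payoffs[("stag", "rabbit")]
    c = payoffs[("rabbit", "stag")]
    d = payoffs[("rabbit", "rabbit")]
    return Fraction(d - b, (a - b) - (c - d))


if __name__ == "__main__":
    print("break-even belief:", stag_threshold())  # 16/27
    half = Fraction(1, 2)
    print("stag at p=1/2:", expected_payoff("stag", half))      # 100
    print("rabbit at p=1/2:", expected_payoff("rabbit", half))  # 125
```

With these numbers, stag pays off on average only if you believe your partner will pick stag more than 16/27 of the time (about 59%). At a coin-flip belief of 1/2, rabbit wins, $125 to $100. For a player who simply maximizes expected dollars, the choice depends only on that belief p, so a change in choices without a change in beliefs is exactly what such a calculator can't explain.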

That a single intranasal dose of vasopressin could affect this decision seems crazy, but that's exactly what researchers publishing in the Proceedings of the National Academy of Sciences found.

Now, I'm a kidney doctor. To me, vasopressin is the hormone that makes you concentrate your urine. But neuroscientists have found that vasopressin has a diverse set of effects in the brain: it stimulates social affiliation, aggression, monogamy, and, among men, paternal behavior.

The experiment randomized 59 healthy men to receive 40 units of intranasal vasopressin or placebo. They then played the stag hunt game. Those who got vasopressin were significantly more likely than those who got placebo to go all in and choose the stag option.

The elegant part of the experiment was the way the researchers tried to pin down exactly why this was happening. It wasn't simply that vasopressin made you more tolerant of risk. They showed this by having the men choose between a high-risk, high-reward lottery and a low-risk, low-reward one; vasopressin had no effect. Nor was it that vasopressin made you feel euphoric, wakeful, or calm: self-reported measures of those states didn't change.

Vasopressin didn't make you more trusting, either. When the men were asked whether they thought their silent partner would choose "stag" over "rabbit," vasopressin didn't change their answers at all. No, the perception of risk didn't really change. What changed was the willingness to engage in a very specific type of risky behavior, which the authors call "risky cooperative behavior."

Risky cooperative behavior is basically anything you do that requires trusting other people to do their part for mutual benefit. In short, vasopressin may be the hormone that gave rise to modern society.

How does it work? Well, functional MRI performed during the experiment showed decreased activity in the dorsolateral prefrontal cortex among those who got vasopressin. This part of the brain plays roles in risk inhibition, high-level planning, and behavioral inhibition, so vasopressin dampening activity there makes some sense given the behavioral result.

But the truth is that understanding the myriad neuro-electro-chemico-hormonal influences on choosing whether to hunt stag or rabbit is beyond the scope of this study. Still, for a believer in free will such as myself, studies like this are always a stark reminder that it isn't necessarily clear who is in the driver's seat when we make those risky decisions.